Author: Christina Sol, Content Manager

While not directly involved in the product or strategy side, Christina leverages her writing skills and audience insights to communicate Retently’s vision and values. She creates engaging narratives around customer experiences, case studies, success stories and blog articles, making complex CX concepts relatable.

Are your surveys yielding questionable data? The issue might lie in bad survey questions. From biases to respondent confusion, these flawed questions can skew results and invalidate your data.

This article breaks down typical mistakes, showing you how to spot and eliminate problem areas to enhance the quality of your surveys.

Stick around, and let’s get your survey game on point.

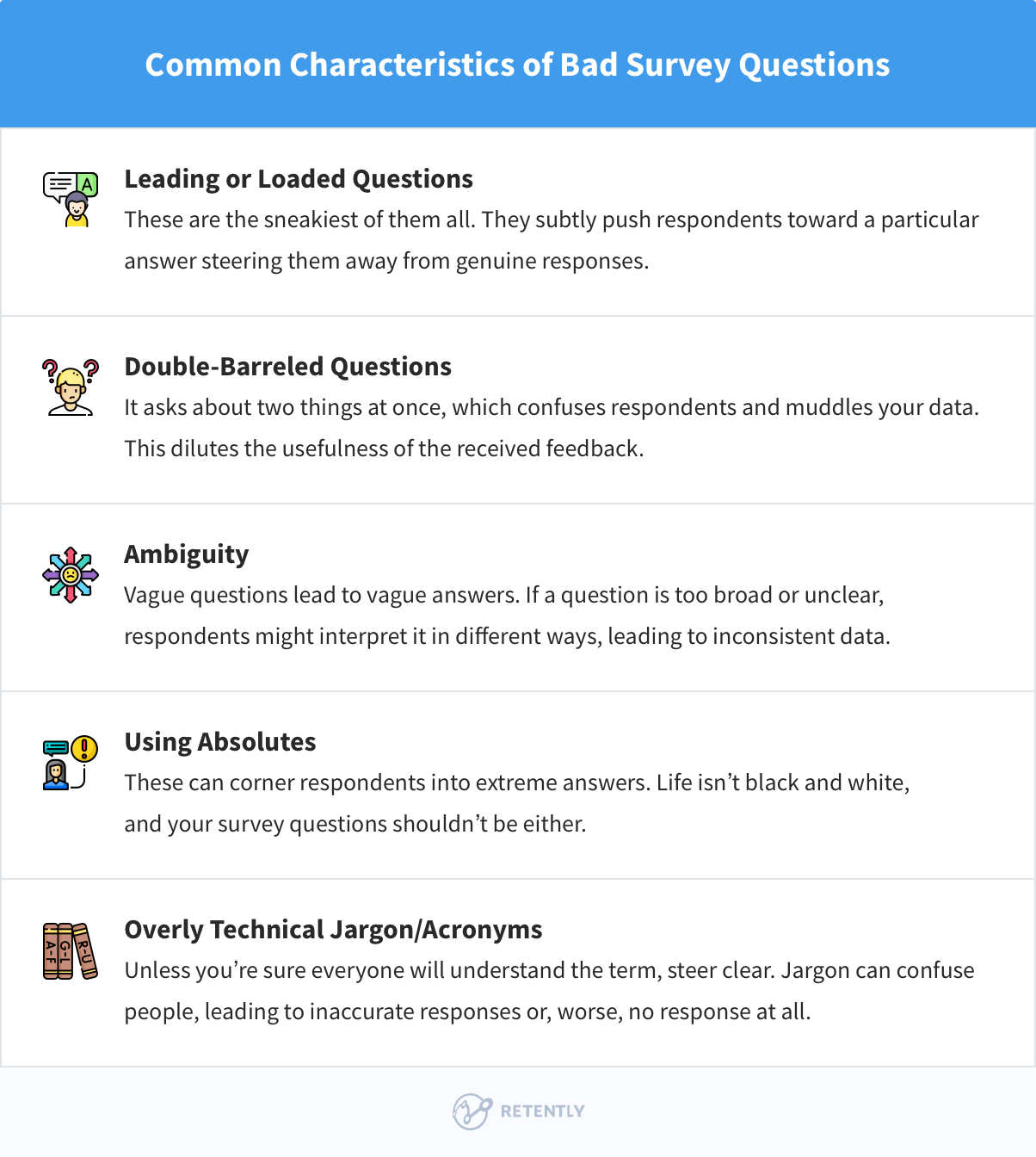

Alright, let’s get into the nitty-gritty of what makes a survey question a “bad” one. You might think a bad question is simply one that’s hard to understand, but it goes much deeper than that. A bad survey question is essentially any question that fails to collect clear, unbiased, and actionable data. These questions can throw off your data’s accuracy and, let’s be honest, waste everyone’s time.

How come? Bad survey questions can wreak havoc on your data quality. If your questions are unclear or biased, the answers you get won’t reflect the true opinions or experiences of your respondents. This results in unreliable data, which can throw off your entire analysis and lead to poor decision-making.

But it doesn’t stop there. Bad survey questions also negatively impact the respondent’s experience. Imagine trying to answer a question that doesn’t make sense or feels intrusive – it’s frustrating, right? When people encounter such questions, they’re more likely to abandon the survey entirely or rush through it without giving thoughtful answers. This means you lose valuable insights and the time you spent creating the survey goes to waste.

Moreover, poor survey design undermines the reliability of your results. If respondents are confused or annoyed, their responses are likely to be inconsistent. This makes it hard to draw meaningful conclusions from your data and leaves you with a flawed understanding of the issue at hand.

Navigating the path to collecting high-quality data through surveys can be challenging, with many potential pitfalls that can compromise your results. Understanding what makes a survey question bad is the first step toward avoiding these pitfalls and ensuring your surveys are effective and insightful.

Stick around, and soon, you’ll be spotting bad survey questions from a mile away and crafting questions that get you the clear, actionable data you need.

Ready to dive deeper into what not to do?

Navigating the minefield of survey question design isn’t as daunting as it sounds. Let’s break down some of the top mistakes to avoid, starting with one of the biggies: leading questions.

Leading questions are the smooth talkers of the survey world – they subtly push respondents toward a specific answer, often the one the survey creator prefers. Loaded questions are similar, but they add a layer of emotional or biased language and contain implicit assumptions that can skew responses.

Both types of questions influence how someone responds through their wording, relying on subjective descriptors and charged terminology. A loaded question also carries a built-in assumption that pressures the respondent to answer a certain way. This bias compromises the integrity of the feedback and can lead to higher survey abandonment rates and data that doesn't accurately reflect customer experiences. Worse, it can make you think you're on the right track when you're actually off course.

Let’s look into several examples:

These examples show how word choice can steer responses in a specific direction, potentially creating a false sense of consensus or satisfaction.

By steering clear of leading or loaded questions, you’re on your way to crafting surveys that give you the real scoop, not just what you might want to hear. Next up, let’s tackle another common mistake that can trip up your survey’s effectiveness: double-barreled questions. Stick around, we’re just getting started on cleaning up those surveys.

Double-barreled questions are like those two-for-one deals that sound good until you realize they’re more confusing than beneficial. These questions ask about two different things at once, forcing respondents to give one answer for two separate issues. This can muddy the waters of your data, making it hard to know which part of the question was addressed in their response.

Ever seen a question like "How satisfied are you with our pricing and product quality?" or "How satisfied are you with our product quality and customer service?" These are classic double-barreled questions.

The second one assumes that a respondent's opinion of product quality matches their opinion of customer service, which might not be the case. Here are some more examples to consider:

These questions make it impossible to determine which aspect the respondent is commenting on, which dilutes the usefulness of the feedback.

By keeping your questions to the point, you’ll make it easier for respondents to provide clear, useful answers. This clarity will improve the quality of your data while enhancing the respondent’s experience. Up next, we’ll explore the pitfalls of using ambiguous or vague wording.

Ever gotten a survey question that leaves you wondering, "What do they mean by that?" If so, you've encountered ambiguous or vague wording. Unclear phrasing invites a range of interpretations, forcing respondents to guess what you're asking and likely skewing your precious data.

Questions like "Do you regularly use our products?" are too general. What does "regularly" mean? Every day, once a week, once a month? Make it specific: "How many times a week do you use our product?" Similarly, "Do you think our customer service is good?" is too broad. What exactly does "good" mean here?

Let’s unpack why clear and precise language is crucial and how you can ensure your survey avoids the ambiguity trap:

By tightening up your language and being as specific as possible, you ensure that respondents understand exactly what you’re asking. This clarity leads to more reliable data, making your survey a much stronger tool for decision-making. Up next, let’s tackle how using absolutes in your questions can limit the usefulness of your responses. Stick around for more survey cleanup tips.

When crafting survey questions, throwing in words like “always”, “never”, “all”, or “none” might seem like a good way to gauge strong opinions or behaviors. However, using absolute terms can actually backfire by forcing respondents into a corner. These words can lead to skewed responses that don’t accurately reflect the nuances of human behavior or opinion. Life isn’t black and white, and your survey questions shouldn’t be either.

Absolute terms eliminate any middle ground. They force respondents to either fully agree or disagree, which can be misleading. For many people, answering that they “always” or “never” do something doesn’t quite fit their reality, which might lead them to either abandon the survey or choose an answer that doesn’t accurately reflect their opinions or behaviors. This can distort your data, making it seem like your respondents are more polarized than they actually are. For example, asking “Do you always recycle?” might lead someone who recycles frequently but not always to answer no, which doesn’t accurately reflect their generally positive behavior toward recycling.

By moving away from absolutes and embracing more graded or open-ended questions, you make your survey more respondent-friendly while enhancing the quality and reliability of the data you collect. Coming up next, let’s dive into how jargon and acronyms can alienate respondents, and how you can keep your survey accessible to all.

Dropping technical jargon or acronyms into your survey questions is like speaking in code – only a select few will understand, and you’ll leave everyone else feeling a bit lost. This can alienate or confuse respondents who might not be familiar with the specific lingo of your field, leading to incorrect answers or causing them to drop out of the survey altogether.

Using specialized terminology assumes that all your respondents have the same level of knowledge or background, which is rarely the case.

By ensuring your survey speaks the language of your respondents, you make it more accessible and increase the likelihood of accurate responses.

Navigating survey design can be tricky, and even when you’ve managed to avoid major mistakes, there are still other pitfalls that can compromise your data quality. Let’s explore a couple more areas where survey designers often stumble.

When crafting survey questions, be mindful of cultural and personal biases that can inadvertently influence how questions are framed. Biased framing can produce responses that don't truly reflect respondents' opinions, and it can even make questions come across as offensive.

To prevent this, have people from different backgrounds review your survey before you finalize it so they can catch potentially biased or sensitive phrasing. Avoid words that carry strong emotional implications or cultural biases, and stick to factual, neutral language. Also, frame questions so that every respondent's experience counts as a valid answer.

Avoid idiomatic expressions or references that might not translate well across cultures. Instead of saying, “Rate your experience from 1 to 10, with 10 being over the moon,” use, “Rate your experience from 1 to 10, with 10 being extremely satisfied.”

Scaling in surveys refers to the set of answer options provided for a question. Errors in scaling can confuse respondents and make the data difficult to analyze. Some common scaling errors to watch out for:

Match the scale to the variability you expect in answers. A 1-5 scale might be sufficient for a straightforward satisfaction question, but a 1-10 scale could be better for more nuanced feedback. Additionally, provide clear definitions for scale points, especially for subjective measures. Explain what each number in the scale represents to avoid different interpretations.

Navigating the diverse waters of a target audience requires a personal touch – tailoring your survey to fit the unique traits of different segments. By refining your questions to resonate with specific groups, you’ll get responses that are not only more relevant but also richer in detail. Using personalization as a tool can significantly boost response rates and uncover valuable insights.

Your survey should be just the right length, like a trip that's long enough to reach the destination but not so long that participants lose interest. Focus on the essential questions to keep the survey short while still gathering valuable insights. Respondents' attention, and with it the quality of their answers, tends to drop off quickly, with an attention span of around 8.25 minutes, so keep a close eye on your survey's length.

To collect crucial information before respondents get too tired, put your key questions first and place open-ended questions at the end of your survey. This way, participants can give thoughtful answers to these more demanding questions after they've tackled the simpler ones.

As we dive deeper into the digital age, it’s more important than ever to design surveys that work smoothly on all kinds of devices. Think of a responsive layout as a well-paved road that makes the drive easy and enjoyable. This not only keeps everything clear but also shows you value the respondent’s time and comfort. By minimizing scrolling and cutting out clunky features on mobile devices, surveys become more user-friendly and less intimidating, which can boost completion rates.

It’s important to thoroughly test your survey across various platforms to ensure everyone has the same great experience, no matter the device they’re using.

By avoiding these additional pitfalls, you can further enhance the reliability and validity of your survey results. Each step you take to remove bias and confusion not only improves the quality of your data but also respects and values the diversity of your respondents.

For more context, let’s analyze a poorly designed employee satisfaction survey to highlight specific mistakes.

1. “How often do you feel valued at work?”

2. “Don’t you think our new office layout is great?”

3. “How satisfied are you with the company’s communication and team collaboration?”

4. “Why do you believe our team meetings are ineffective?”

5. “How would you rate the company’s ERP system’s integration with other software?”

6. “How satisfied are you with your job? Very Satisfied, Satisfied, Neutral, Dissatisfied.”

In these examples, you can see how bad survey questions can creep into your surveys and how to fix them. This understanding will help you design better surveys that yield more accurate and useful data.

Creating effective survey questions is both an art and a science. It requires careful consideration, testing, and refinement. Here are some tips to ensure your survey questions are of the highest quality and capable of gathering the insightful data you need.

Conducting a self-review is the first step in identifying bad survey questions and improving them. Here are some techniques you can use to evaluate your questions effectively:

Pre-testing, also known as piloting, is a critical step in survey design. It involves running your survey with a small, representative group before the full launch. This process can unearth issues with question clarity, formatting, and overall flow that you might not have noticed.

First off, it helps you spot any confusing questions that might trip up your respondents. Plus, it lets you check if all your questions actually make sense for the people you’re asking. You can also tweak the wording based on feedback to make everything crystal clear. And, it’s great for figuring out if your survey is too long, so people don’t get tired out while answering. With pre-testing, you can fine-tune your survey to be easy to understand, relevant, and just the right length for the best results.

Many frameworks and tools are available to help you craft effective survey questions. These resources can help you avoid common pitfalls and adhere to best practices in survey design. Specialized survey software offers templates and tips for designing effective questions and can automate much of the survey process.

Iterative feedback involves continuously collecting and incorporating feedback throughout the survey development process, not just during the pre-testing phase.

Here are the key steps for implementing it:

This careful and thorough approach ensures that your surveys are not only well-received but also yield valuable insights that can guide meaningful decisions and strategies.

Think of survey logic like a GPS for your questions, making sure respondents only go down the roads that matter to them and answer questions relevant to their experiences. Skip logic is like a shortcut, making the survey quicker and more fun by letting people skip questions that don’t relate to them. Branching logic personalizes the survey journey for everyone, leading to more accurate and focused data.

Used well, survey logic works like a map-maker carefully planning the best route through your survey.

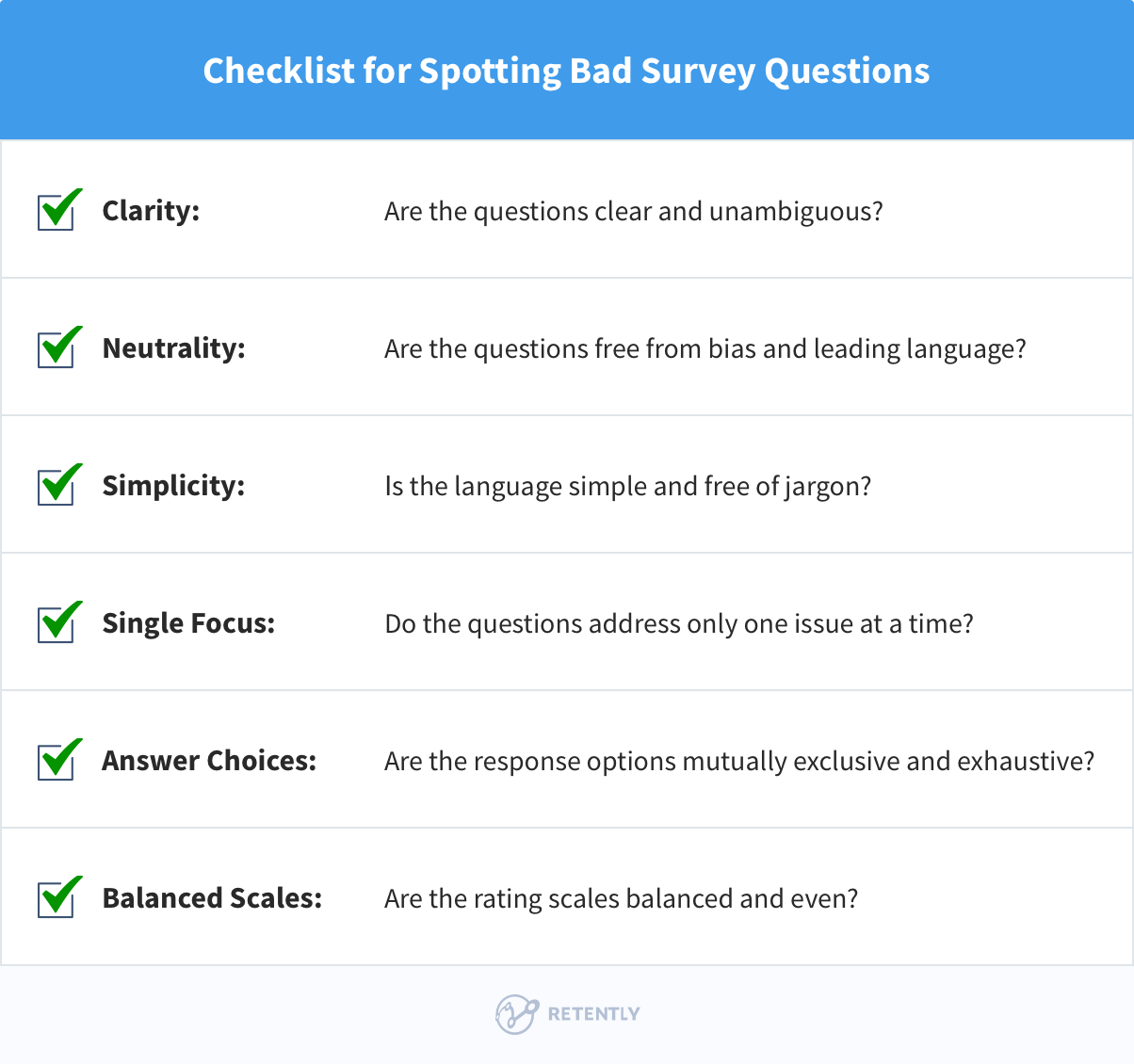

Now that you have a good understanding of what makes a survey question good or bad, it’s time to put this knowledge into practice. Take a moment to review your current surveys and look for common mistakes.

Here’s a simple checklist to help you spot and fix bad survey questions:

Apply the strategies discussed to improve the clarity, neutrality and overall quality of your questions. The effort you put into designing better surveys will pay off with more reliable data and valuable insights.

Create your free Retently account – no long-term obligation or credit card is required. Leverage the available templates, automation features, and detailed reports to ensure your surveys remain effective, engaging, and capable of providing the valuable insights you need to make informed decisions.